Adobe has announced that it will release a beta of its AI video-generation tool, Firefly Video Model, later this year. The tool builds on Adobe’s existing family of Firefly AI models, which began with a text-to-image model last spring.

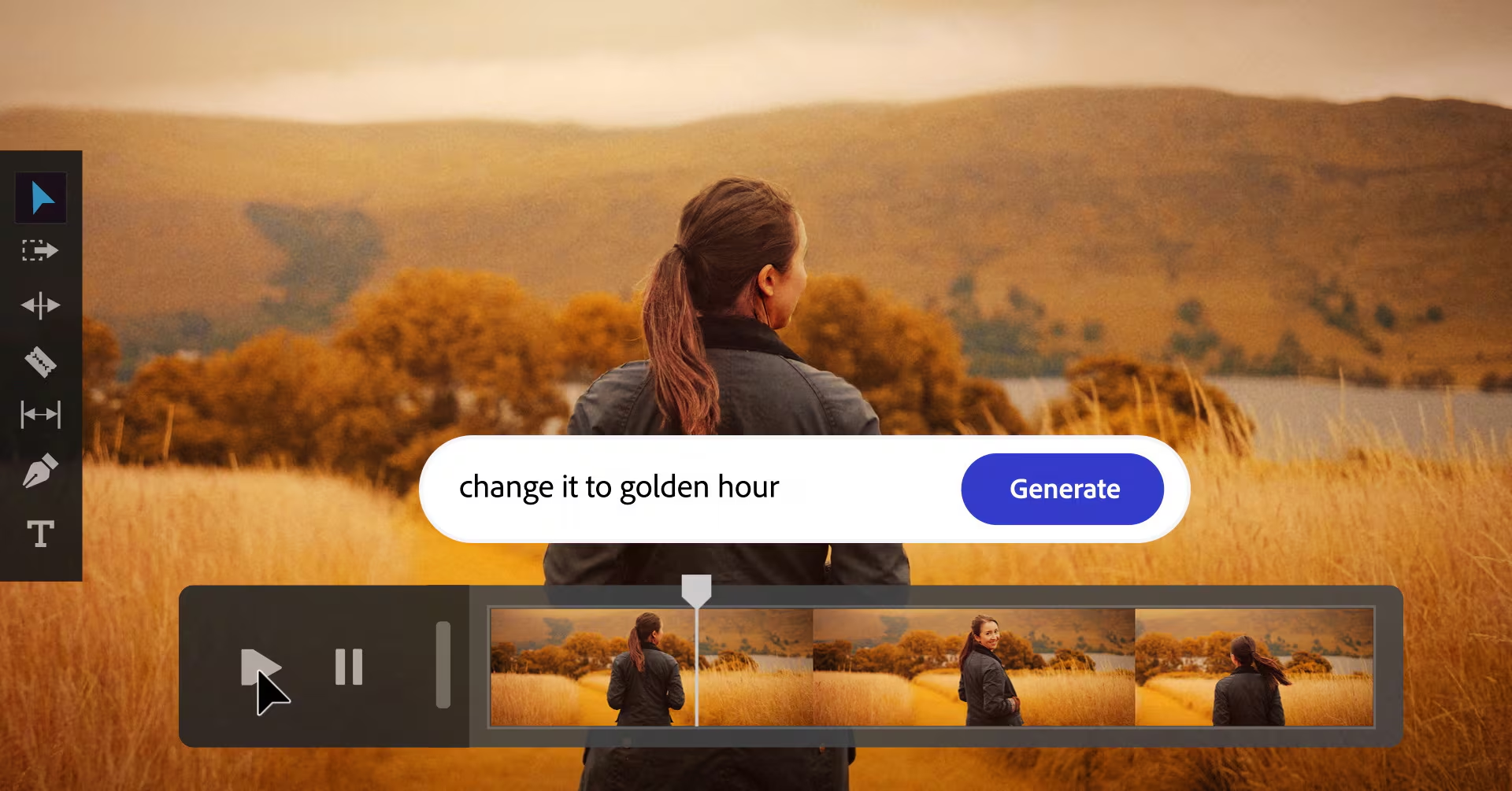

Adobe’s Firefly Video Model is designed to transform video editing workflows by giving creatives and video editors a faster way to explore their ideas. According to Ashley Still, Senior Vice-President and General Manager of Adobe’s Creative Product Group, the tool helps editors ideate, fill gaps in their timelines, and add new elements to existing footage.

While AI-generated video is still in its early stages, it holds enormous potential for marketers and the creative industries. OpenAI’s Sora model, released to a select group of testers earlier this year, and smaller companies such as Runway have made significant progress in the field. However, some early marketing efforts built on AI-generated video, such as a Toys R Us brand film, drew criticism on social media.

Adobe’s Firefly Video Model sets itself apart by being trained exclusively on content the company has licensed, which addresses concerns about intellectual property infringement. Zeke Koch, Vice-President of Product Management at Adobe Firefly, noted that this is particularly appealing to marketing teams, who are eager to use a tool that lets them create content without worrying about legal exposure.

James Young, Head of Creative Innovation at the ad agency BBDO, said he was excited about Firefly’s potential. BBDO has experimented with other generative video tools, but because those models were trained on unlicensed content, the agency could use them only for internal purposes. Firefly’s video tool, by contrast, opens the door to a broader range of creative exploration.

The integration of Firefly Video into Adobe’s widely used software suite is expected to encourage more marketers and agencies to experiment with AI-generated video. Jeremy Lockhorn, Senior Vice-President of Creative Technologies and Innovation at the 4A’s, believes these tools will become commonplace in agency workflows once they are built into popular software like Adobe’s.

Firefly Video can generate five-second video clips from text prompts, giving videographers a quick way to produce new footage. Ashley Still pointed to the growing demand for fresh, short-form video content and said AI can help editors meet tight deadlines while still delivering high-quality results.

According to Adobe, the model excels at generating natural-world footage, with examples including an erupting volcano, a desert landscape, and a snow-blanketed forest. It also lets video editors apply various ‘camera controls’ and create ‘complementary shots’ from existing footage. In one example, real footage of a girl looking at a dandelion through a magnifying glass was followed by an AI-generated close-up of the flower.

Adobe also plans to release a feature called Generative Extend for Premiere Pro later this year, which will let editors lengthen clips by up to two seconds with AI-generated footage to fill gaps in their timelines.

Despite rapid advances in text-to-video AI models, the technology is still in its infancy, and technical bugs remain. These models often struggle to render text and fine details of human anatomy, and they can make unpredictable errors, much like text-generating models such as ChatGPT.

Companies like Adobe and OpenAI continue to invest in this technology, betting on its potential to transform commercial industries such as marketing. BBDO’s Young shares this view: he acknowledges the current limitations but emphasizes the tools’ ability to create and scale high-quality content for high-volume media placements. As these tools become more powerful, they could let creative professionals realize their visions at unprecedented speed, cost, and scale.